Code-lab

My half-baked wacky coding projects.

Work in progress and works that should have been progressed.

2020 | Snake City

How can phones be used to connect people physically, not digitally?

Snake.City is an urban game to be played anywhere with anybody. Players place their thumbs on either side of a mobile phone and, in turn, connect with others. The game ends when any one player lets go. Go to snake.city to start the game.

We built the game with web technologies so that it runs on any device. We use WebSockets on the backend to detect touches across multiple devices. This lets players jump in quickly at any time without having to install an app.
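A rough sketch of the game rule, not the actual Snake.City code: it assumes each device sends `touch_start` and `touch_end` messages over its socket, and that the server keeps one shared state. The class name, message names and player ids are made up for illustration, and the networking itself is left out.

```python
class SnakeGame:
    """Tracks which players are currently holding on (hypothetical model)."""

    def __init__(self):
        self.holding = set()  # ids of players whose thumbs are down
        self.over = False

    def handle(self, player_id, event):
        """Apply one message received from one device."""
        if self.over:
            return  # ignore everything after the game has ended
        if event == "touch_start":
            self.holding.add(player_id)
        elif event == "touch_end" and player_id in self.holding:
            # Any one player letting go ends the game for everybody.
            self.holding.discard(player_id)
            self.over = True


game = SnakeGame()
game.handle("ana", "touch_start")
game.handle("ben", "touch_start")
game.handle("ana", "touch_end")
print(game.over, game.holding)
```

Keeping the rule in one small state object means the socket layer only has to forward messages, whichever WebSocket library sits underneath.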

Collaboration with » Giulia Gualtieri, Gavin Wood

My Role » Front end development + Visual design

2020 | Donation.land

Online visual donation platform

This project aims to create a place online where people can stand together for a social cause. It also aims to update the ways that petitions and donations are gathered online, by providing payment infrastructure for organizations to successfully progress toward their goals.

Made with friends project : front end development ( p5.js + CSS )

2020 | Arena2Slides

Converting Are.na channels into cool slides

My friends and I made a service that converts your Are.na channel into a slideshow to share with anyone! If you don't know Are.na, it's a visual organization tool like Pinterest, but cooler. I worked on the front end of the product. It was made in two days, so it might be a bit buggy, but check it out here

Made with friends project : front end development ( javascript + CSS )

2019~ | Google Sheet to Web

Use Google Sheets to update your website

It was annoying to dive into HTML every time I wanted to refresh the news section. So I made sheet2news.js, which lets you update your website from a Google Sheet in a breeze. All the news updates below come from the Google Sheet. See the GitHub repo for this here
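The idea can be sketched like this. It is not sheet2news.js itself (that one is JavaScript); it is a small Python illustration that assumes the sheet is available as CSV text with `date` and `text` columns. The column names, the function name and the sample rows are placeholders.

```python
import csv
import html
import io


def news_html(csv_text):
    """Turn CSV rows (assumed columns: date, text) into an HTML list."""
    items = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        date = html.escape(row["date"].strip())
        text = html.escape(row["text"].strip())  # escape so cell content cannot inject markup
        items.append(f"<li><time>{date}</time> {text}</li>")
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


# Placeholder rows standing in for the sheet's contents.
sample = "date,text\n2020-05-01,First news item\n2020-06-12,Second <b>item</b>\n"
print(news_html(sample))
```

The point is that the page only renders whatever rows the sheet holds, so adding news means adding a row rather than editing HTML.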

2019~ | Is this violence? Am I too sexy?

What is violence and sexiness in the eye of Google's AI?

This game uses the algorithm behind Google’s safe search filter to rate the violence or sexiness of the incoming webcam footage. The player must trick the AI into thinking there is extreme violence or sexiness in the picture. The game questions the implications of autonomous, biased systems quantifying subjective notions such as violence. Coming soon to the App Store if Apple allows it... which I doubt...

Solo project : From concept to development ( Unity C# )

2018 | LIKED it!

Facebook plugin that likes when you really, truly mean it

No more of those disingenuous likes! It only likes when I really smile. But then you start to wonder if something sinister like this is actually running on your laptop to collect data already...(scary)

Solo project : From concept to development ( processing + javascript )

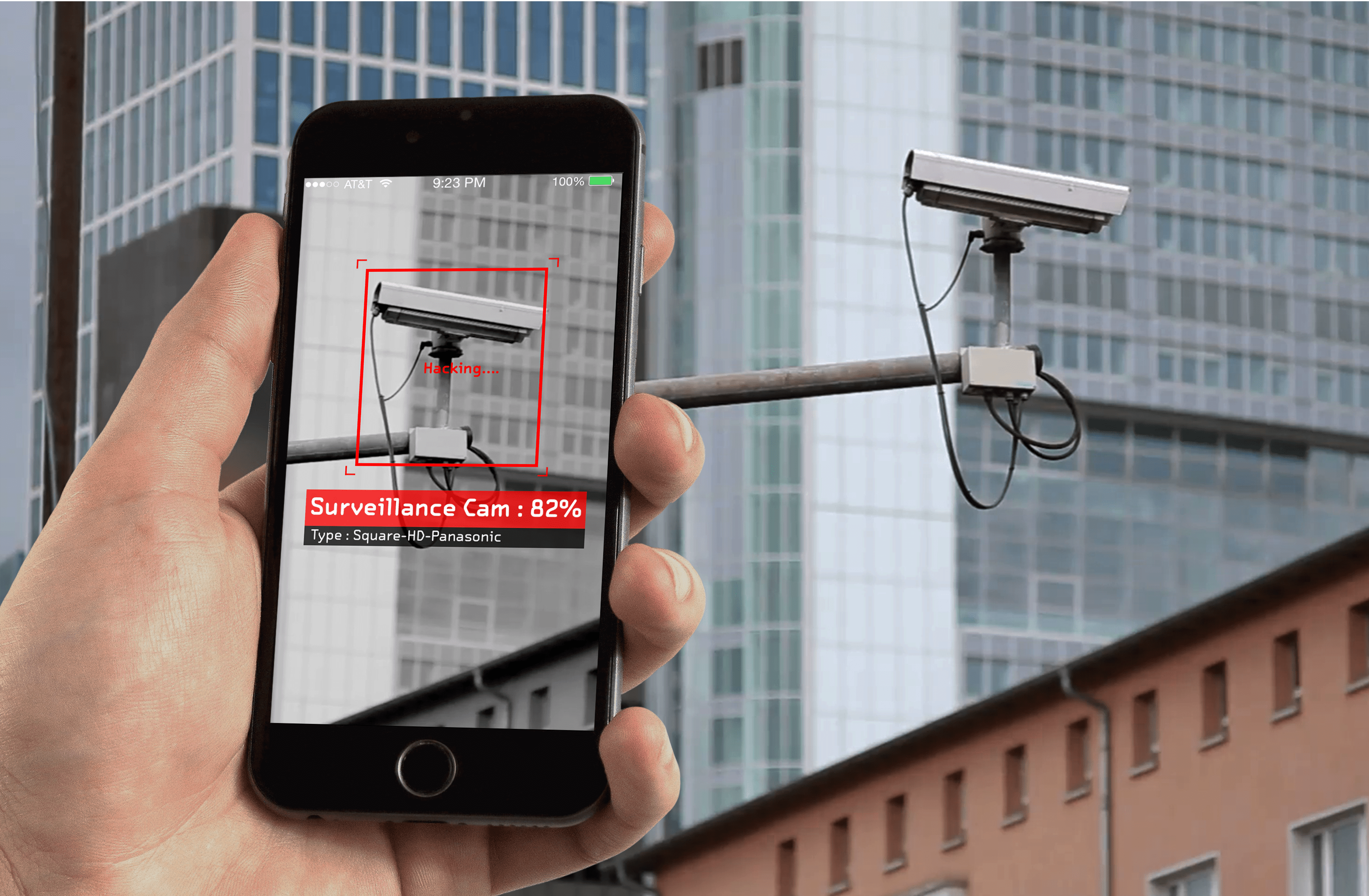

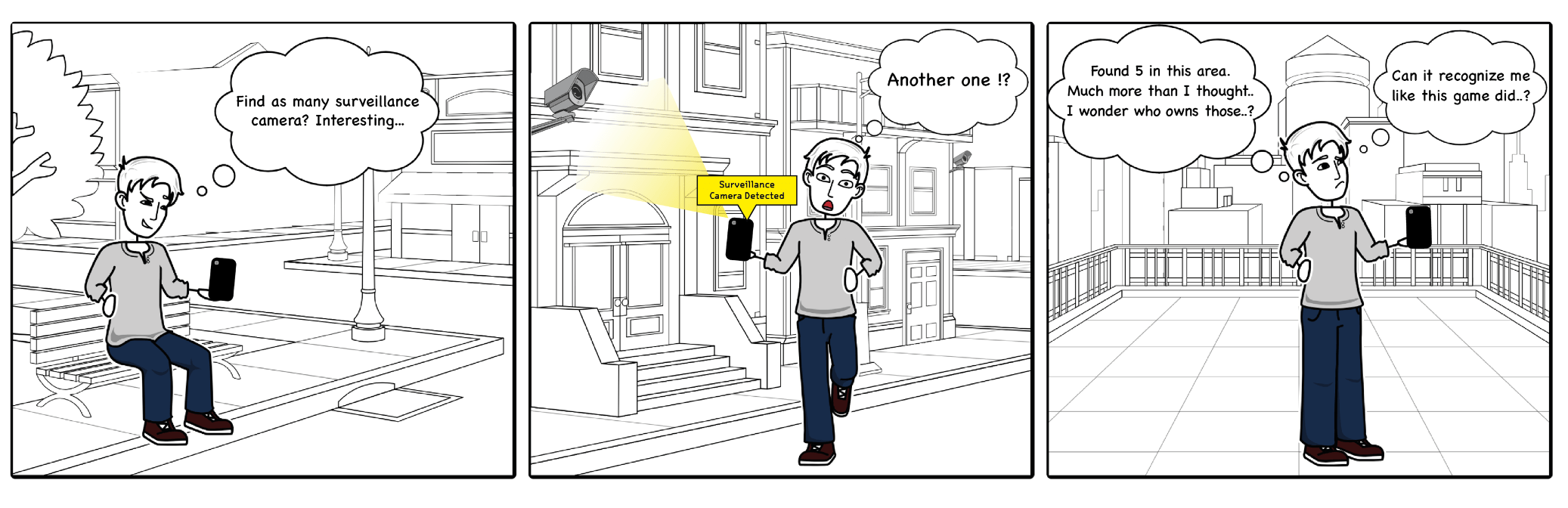

2018 | Surveillance Go

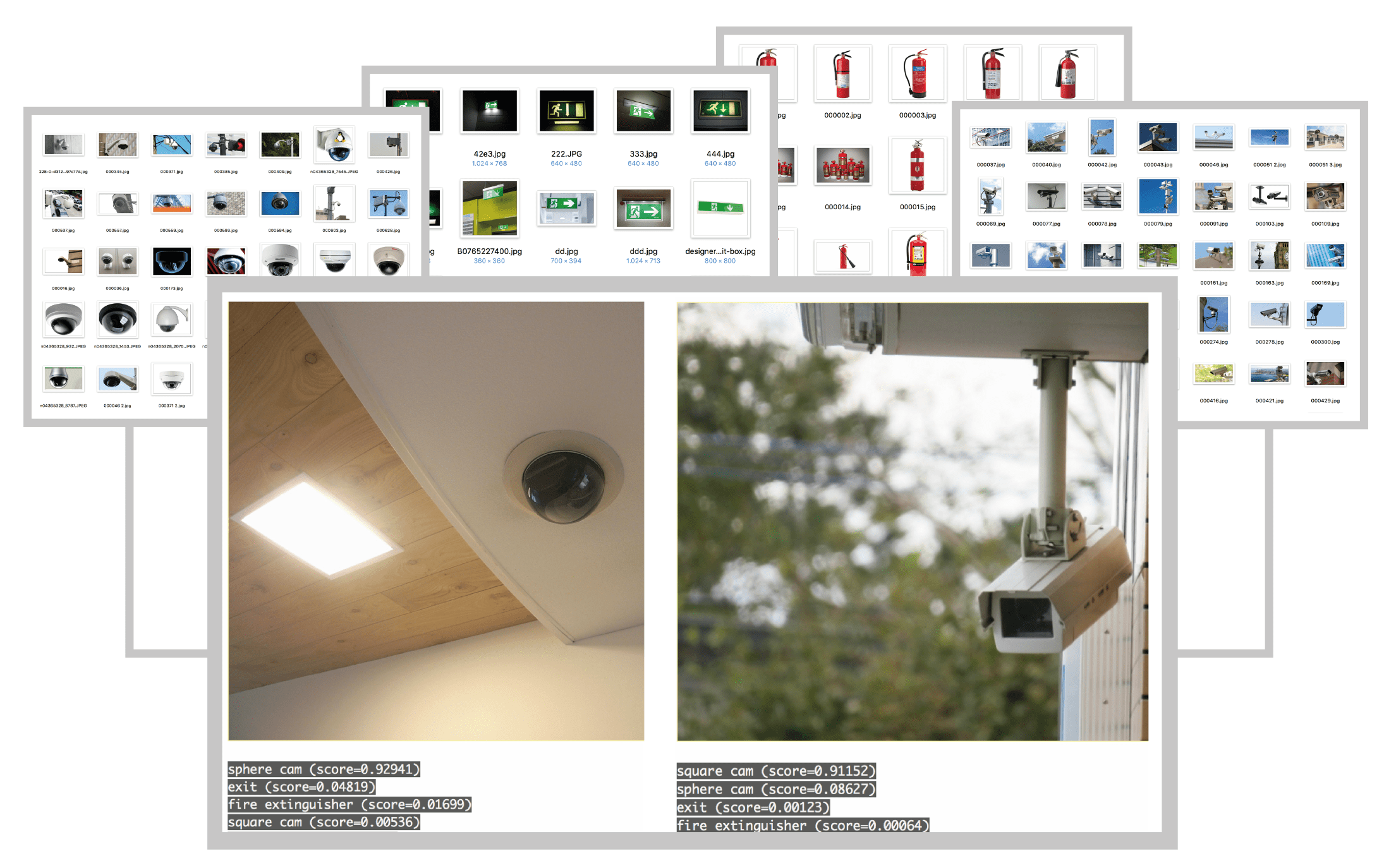

Training custom neural network model to detect surveillance cameras

What if we could train our own neural network to detect the surveillance cameras that are watching us? With this question in mind, I started training a neural network model (TensorFlow.js) on more than 1045 images of different surveillance cameras. Currently, the accuracy is still low. It needs more data. Do send me more pics of the surveillance cameras around you!

Try the prototype in the browser (GitHub)

Solo project : From concept to development ( HTML5 + python + tensorflow.js )

2018 | Using YOLO to make objects talk

AR+AI app that makes objects talk to each other

What do bananas feel every day? How about beer bottles? We now know, thanks to this unique AR+AI app which I made in a day at the CIID machine learning course. It uses YOLO, an object recognition framework built on neural networks, to detect what it is seeing. I created an XML list of the objects it recognizes to build a playful dialogue between things.
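Roughly, the XML list works like a script lookup keyed by the detected label. The sketch below is Python rather than the openFrameworks code used in the app, and the XML layout, the function names and the lines of dialogue are all invented for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical layout: one <object> per label, each with its <line>s.
DIALOGUE_XML = """
<objects>
  <object label="banana"><line>I feel a bit bruised today.</line></object>
  <object label="bottle"><line>Is anyone else feeling empty?</line></object>
</objects>
"""


def load_lines(xml_text):
    """Map each object label to its list of lines."""
    root = ET.fromstring(xml_text.strip())
    return {
        obj.get("label"): [line.text for line in obj.findall("line")]
        for obj in root.findall("object")
    }


def conversation(detected, lines):
    """Give each detected object that has a script its first line."""
    return [(label, lines[label][0]) for label in detected if label in lines]


# 'detected' stands in for the labels an object detector would return.
detected = ["banana", "chair", "bottle"]
for speaker, text in conversation(detected, load_lines(DIALOGUE_XML)):
    print(f"{speaker}: {text}")
```

Objects without an entry in the list (the chair here) simply stay silent.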

In collaboration with : Salem Al-Mansoori

My part : I worked on the code part. ( openFrameworks + oFxDarknet + XML )

2016 | Voice to Text Bazooka

Bazooka that converts voice into texts for concerts

At the Academy for Programming and Art (BaPa), I developed an installation where your voice gets shot as 3D text objects on the screen. This was used in an actual concert for the pop idol group Rainbow Konkista Dolls in Tokyo.

In collaboration with : Shogo Tabuchi, Tsutomu Shiihara, Risa Horikoshi, Naho Yamaguchi

My part : I worked on the entire code+engineering part. ( Unity C# )

2015 | Interactive AR Washing Mirror

Washing Mirror that teaches kids the proper way to wash hands

An interactive AR washing mirror to teach children the proper way to wash hands. One of the most effective ways to prevent the spread of infections is to wash hands properly. But most children tend to only rub their hands together, which does not remove the germs on the back of the hand and between the fingers. To meet this challenge, we developed an interactive washing mirror that makes washing hands fun whilst teaching children the proper way to do it.

In collaboration with : Yuta Takeuchi, Eri Nishihara, Katsufumi Matsui, Yuta Kato, Wataru Ito

My part : I worked on the hand detection algorithm (openFrameworks C++) and the movie

2013 | Chronobelt

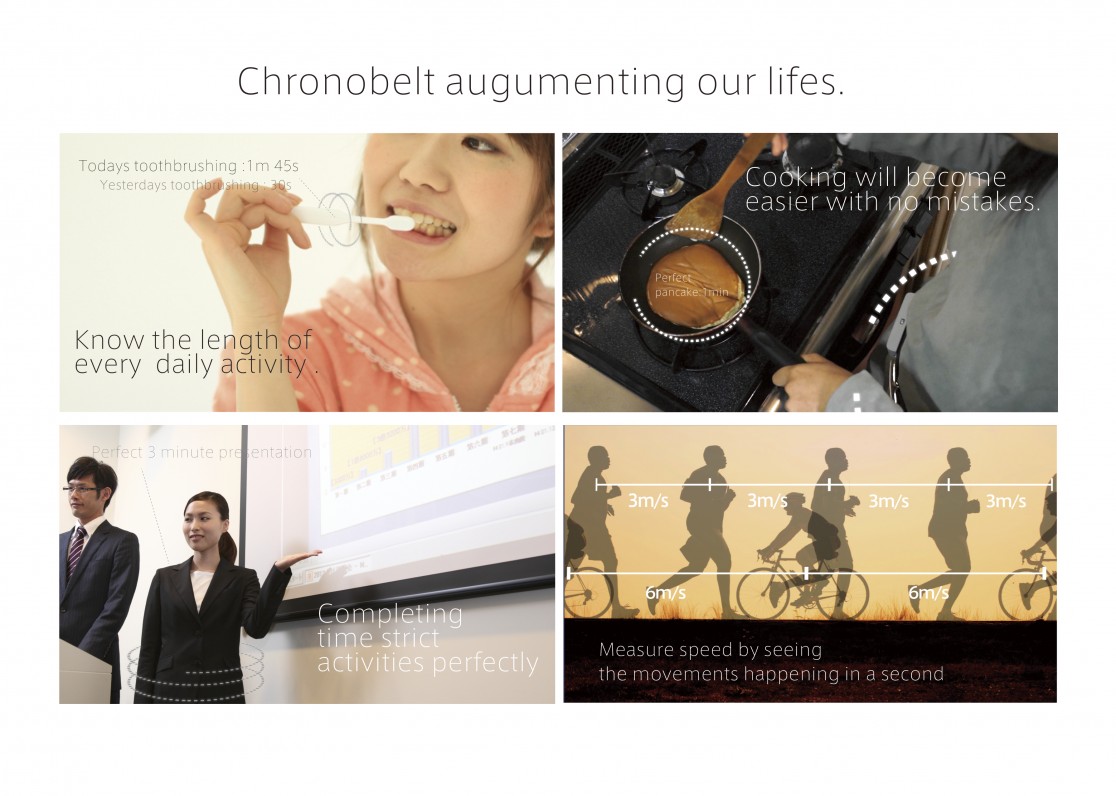

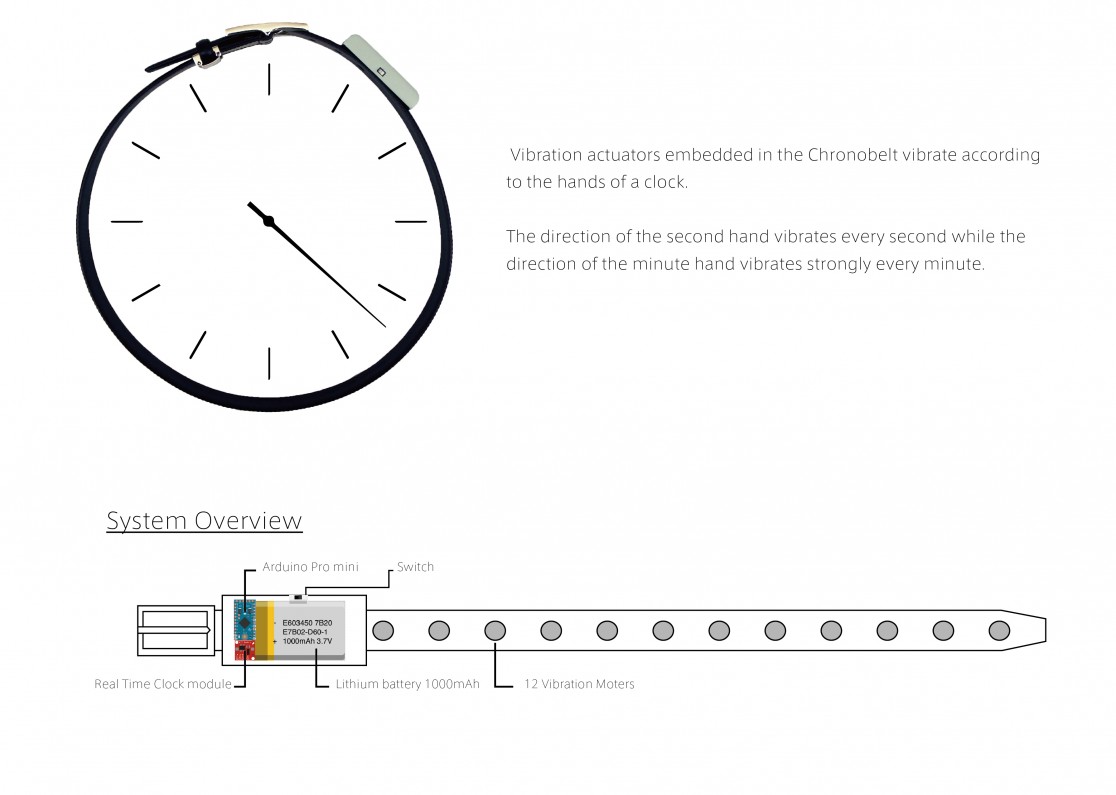

Wearable belt that enhances your time perception.

If we had the ability to tell time accurately to the second, what would it be like? To find out, I made a wearable belt with 12 vibration actuators embedded inside. These motors map the direction of the clock's second hand around your waist. Without looking at a clock, users can always tell the correct time from the location of the vibration around their body. By wearing this belt daily I could understand subjective time objectively, becoming aware of the length of every activity I do. I started to quantify my every action, such as how long I take to read a page, or how fast I was speaking. Wearing this belt for a long period of time may have changed my vague perception of time completely, but I developed a unique headache after 2 months and quit. Still, a cool idea, and I might try again. Anyone interested in trying it out?
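The mapping from seconds to motors can be sketched as follows. This is Python rather than the Arduino code on the belt, and it assumes motor 0 sits at the front (12 o'clock), that indices run clockwise, and that each motor covers a 5-second slice. The function names are made up.

```python
MOTORS = 12  # vibration actuators spaced evenly around the waist


def motor_for_second(second):
    """Return the index (0-11) of the motor that matches the second hand."""
    return (second % 60) * MOTORS // 60


def motor_angle(index):
    """Angle in degrees, clockwise from the front, of a given motor."""
    return index * 360 // MOTORS


for s in (0, 15, 30, 45, 59):
    m = motor_for_second(s)
    print(s, m, motor_angle(m))
```

With 12 motors the belt resolves time in 5-second steps, the same positions as the hour marks on a clock face.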

Solo project : From concept to development ( 3D modelling in Rhinoceros + Arduino )

That's it folks. Thank you for reading all the way down here.

Stay a bit longer if you have time ;)